
If the AI industry had its own “song of the summer,” the title would go to Alibaba’s Qwen team.

Over just the past week, the frontier-model AI research division of the Chinese e-commerce giant has released not one, not two, not three, but four (!!) new open source generative AI models with record-setting benchmark results, besting even some leading proprietary options.

Last night, the Qwen team capped it off with the release of Qwen3-235B-A22B-Thinking-2507, its updated reasoning large language model (LLM).

The new model, which we’ll call Qwen3-Thinking-2507 for short, now leads or closely trails top-performing models across several major benchmarks.


As AI influencer and news aggregator Andrew Curran wrote on X: “Qwen’s strongest reasoning model has arrived, and it is at the frontier.”

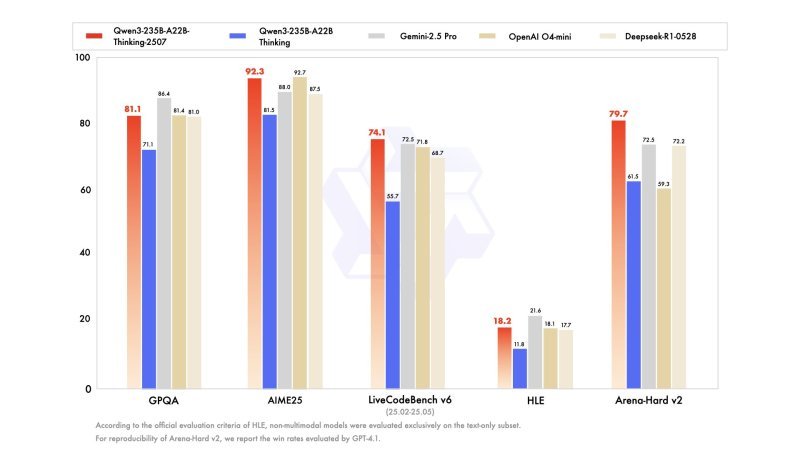

On the AIME25 benchmark, designed to evaluate problem-solving in mathematical and logical contexts, Qwen3-Thinking-2507 scores 92.3, just behind OpenAI’s o4-mini (92.7) and well ahead of Gemini-2.5 Pro (88.0).

The model also turns in a commanding performance on LiveCodeBench v6, scoring 74.1, ahead of Google’s Gemini-2.5 Pro (72.5) and OpenAI’s o4-mini (71.8), and far above its earlier version, which posted 55.7.

On GPQA, a benchmark of graduate-level multiple-choice questions, the model achieves 81.1, nearly matching DeepSeek-R1-0528 (81.0) and trailing Gemini-2.5 Pro’s top mark of 86.4.

On Arena-Hard v2, which evaluates alignment and subjective preference through win rates, Qwen3-Thinking-2507 scores 79.7, placing it ahead of all competitors.

These results show that the model not only surpasses its predecessor but also sets a new standard for what open source, reasoning-focused models can achieve.

A shift away from ‘hybrid reasoning’

The release reflects a broader strategic shift by Alibaba’s Qwen team: moving away from hybrid reasoning models that required users to manually toggle between “thinking” and “non-thinking” modes.

Instead, the team now trains separate models for reasoning and instruction tasks. This separation lets each model be optimized for its intended purpose, improving consistency, clarity, and benchmark performance. The new Qwen3-Thinking model fully embodies this design philosophy.

Alongside it, Qwen launched Qwen3-Coder-480B-A35B-Instruct, a 480B-parameter model built for complex coding workflows. It supports a 1 million token context window and outperforms GPT-4.1 and Gemini 2.5 Pro on SWE-bench Verified.

Also announced was Qwen3-MT, a multilingual translation model trained on trillions of tokens across 92+ languages. It supports domain adaptation and terminology control, with inference priced from just $0.50 per million tokens.

Earlier in the week, the team released Qwen3-235B-A22B-Instruct-2507, a non-reasoning model that surpassed Claude Opus 4 on some benchmarks, along with a lightweight FP8 variant for more efficient inference.

All of the models are licensed under Apache 2.0 and are available through Hugging Face, ModelScope, and the Qwen API.

Licensing: Apache 2.0 and its enterprise advantage

Qwen3-235B-A22B-Thinking-2507 is released under the Apache 2.0 license, a highly permissive, commercially friendly license that allows enterprises to download, modify, self-host, fine-tune, and integrate the model into proprietary systems.

This stands in contrast to proprietary models and research-only open releases, which often require API access, impose usage limits, or prohibit commercial deployment. For compliance-conscious organizations and for teams that want to control cost, latency, and data privacy, Apache 2.0 licensing provides full flexibility and ownership.

Availability and pricing

Qwen3-235B-A22B-Thinking-2507 is available now as a free download on Hugging Face and ModelScope.

For enterprises that don’t want to, or lack the resources to, host model inference on their own hardware or virtual private cloud, the model is also available through Alibaba Cloud’s API:

- Input price: $0.70 per million tokens

- Output price: $8.40 per million tokens

- Free tier: 1 million tokens, valid for 180 days
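To make the pricing concrete, here is a minimal Python sketch that turns the list prices above into a per-request estimate. It assumes linear per-token billing, assumes the model’s reasoning (thinking) tokens are billed as output tokens, and ignores the free-tier allowance; the function name `estimate_cost_usd` is hypothetical and not part of any Alibaba Cloud SDK.

```python
# Hedged sketch: estimate the cost of one API call to
# Qwen3-235B-A22B-Thinking-2507 from the quoted list prices.
# Assumptions: linear per-token billing, reasoning tokens billed as
# output, and no free-tier allowance applied.

INPUT_USD_PER_MILLION = 0.70
OUTPUT_USD_PER_MILLION = 8.40


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated charge in US dollars for one request."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens / 1_000_000 * INPUT_USD_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # A short prompt followed by a long reasoning trace.
    cost = estimate_cost_usd(input_tokens=2_000, output_tokens=30_000)
    print(f"${cost:.4f}")  # prints $0.2534
```

Because output tokens cost 12 times as much as input tokens at these rates, long reasoning traces, rather than prompt length, are likely to dominate the bill.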

The model is compatible with agentic frameworks through Qwen-Agent and supports advanced deployment via OpenAI-compatible APIs.

It can also be run locally with Transformer frameworks or integrated into development stacks through Node.js, CLI tools, or structured prompting interfaces.

Recommended sampling settings for best performance are temperature=0.6 and top_p=0.95, with a maximum output length of 81,920 tokens for complex tasks.
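For teams calling the model through an OpenAI-compatible endpoint, the sketch below shows how the recommended sampling settings map onto a standard `/chat/completions` request body, using only the Python standard library. The base URL, the model identifier (taken here from the Hugging Face repository name), and the placeholder API key are assumptions for illustration, not values from the article; check your provider’s documentation for the exact ones. The request is built but not sent.

```python
import json
import urllib.request

# Assumption: a local OpenAI-compatible server on port 8000. Replace with
# your provider's base URL; this value is not taken from the article.
BASE_URL = "http://localhost:8000/v1"

# Assumption: the Hugging Face repository name used as the model ID.
# Hosted APIs may expose the model under a different identifier.
MODEL_ID = "Qwen/Qwen3-235B-A22B-Thinking-2507"

# Recommended sampling settings quoted in the article.
TEMPERATURE = 0.6
TOP_P = 0.95
MAX_OUTPUT_TOKENS = 81_920  # suggested output budget for complex tasks


def build_chat_request(prompt: str, api_key: str = "EMPTY") -> urllib.request.Request:
    """Build (but do not send) a POST request to the /chat/completions route."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": TEMPERATURE,
        "top_p": TOP_P,
        "max_tokens": MAX_OUTPUT_TOKENS,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Placeholder key (hypothetical); supply a real one for hosted APIs.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (requires a live server)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    req = build_chat_request("Prove that the square root of 2 is irrational.")
    # Print the JSON body instead of sending it.
    print(json.dumps(json.loads(req.data), indent=2))
```

Calling `send(req)` against a running server returns the parsed response. How a given server surfaces the model’s reasoning in that response varies, so the sketch makes no assumption about it.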

Enterprise applications and future outlook

With its strong benchmark results and permissive licensing, Qwen3-Thinking-2507 is well positioned for enterprise workloads that depend on careful, multi-step reasoning.

The broader Qwen3 family, spanning coding, translation, and instruction-following models, also lets teams choose a model suited to each task.

The Qwen team’s move toward specialized models for distinct tasks, backed by technical transparency and community access, points toward open, high-performing, production-ready AI infrastructure.

As more enterprises look for alternatives to API-gated, black-box models, Alibaba’s Qwen series is emerging as a viable open source option.